BIG DATA TESTING

Big data testing is the process of verifying and validating large volumes of complex data in big data systems. It checks data for accuracy, consistency, and completeness, and it assesses how the data is processed and stored. Testers use specialized tools to detect anomalies and to evaluate data transformation and integration. Performance testing confirms that the system scales and handles massive data loads efficiently. Security and compliance testing protect sensitive data and confirm that regulatory requirements are met. This testing is essential for organizations to maintain data quality, make informed decisions, and derive valuable insights from their big data solutions, contributing to overall business success.
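To make "accuracy, consistency, and completeness" more concrete, here is a minimal sketch of the kind of checks a tester might run on one batch of records. It assumes a hypothetical order dataset with fields `order_id`, `customer_id`, and `amount`, and hypothetical rules (all three fields required, `order_id` unique, `amount` non-negative). Real projects would run equivalent checks at scale with their own tooling.

```python
from collections import Counter

# Hypothetical rule: every record must carry these fields with non-null values
REQUIRED_FIELDS = ("order_id", "customer_id", "amount")

def profile_batch(records):
    """Return simple completeness, uniqueness, and validity metrics for a batch."""
    total = len(records)
    # Completeness: records where every required field is present and not None
    complete = sum(
        1 for r in records if all(r.get(f) is not None for f in REQUIRED_FIELDS)
    )
    # Uniqueness: order_id values that appear more than once
    counts = Counter(r.get("order_id") for r in records)
    duplicates = sorted(k for k, c in counts.items() if k is not None and c > 1)
    # Validity: amounts must be non-negative numbers (hypothetical business rule)
    invalid_amounts = [
        r.get("order_id")
        for r in records
        if not isinstance(r.get("amount"), (int, float)) or r.get("amount") < 0
    ]
    return {
        "total": total,
        "completeness": complete / total if total else 1.0,
        "duplicate_ids": duplicates,
        "invalid_amount_ids": invalid_amounts,
    }

# Hypothetical sample batch with one null field, one duplicate ID, and one negative amount
batch = [
    {"order_id": 1, "customer_id": "c1", "amount": 25.0},
    {"order_id": 2, "customer_id": None, "amount": 10.0},
    {"order_id": 2, "customer_id": "c3", "amount": -5.0},
    {"order_id": 4, "customer_id": "c4", "amount": 12.5},
]
report = profile_batch(batch)
print(report)
# → {'total': 4, 'completeness': 0.75, 'duplicate_ids': [2], 'invalid_amount_ids': [2]}
```

Returning metrics rather than failing on the first bad record lets a tester compare the results against agreed thresholds (for example, "completeness must be at least 99%") instead of treating every anomaly as fatal.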

FUNDAMENTALS OF BIG DATA TESTING

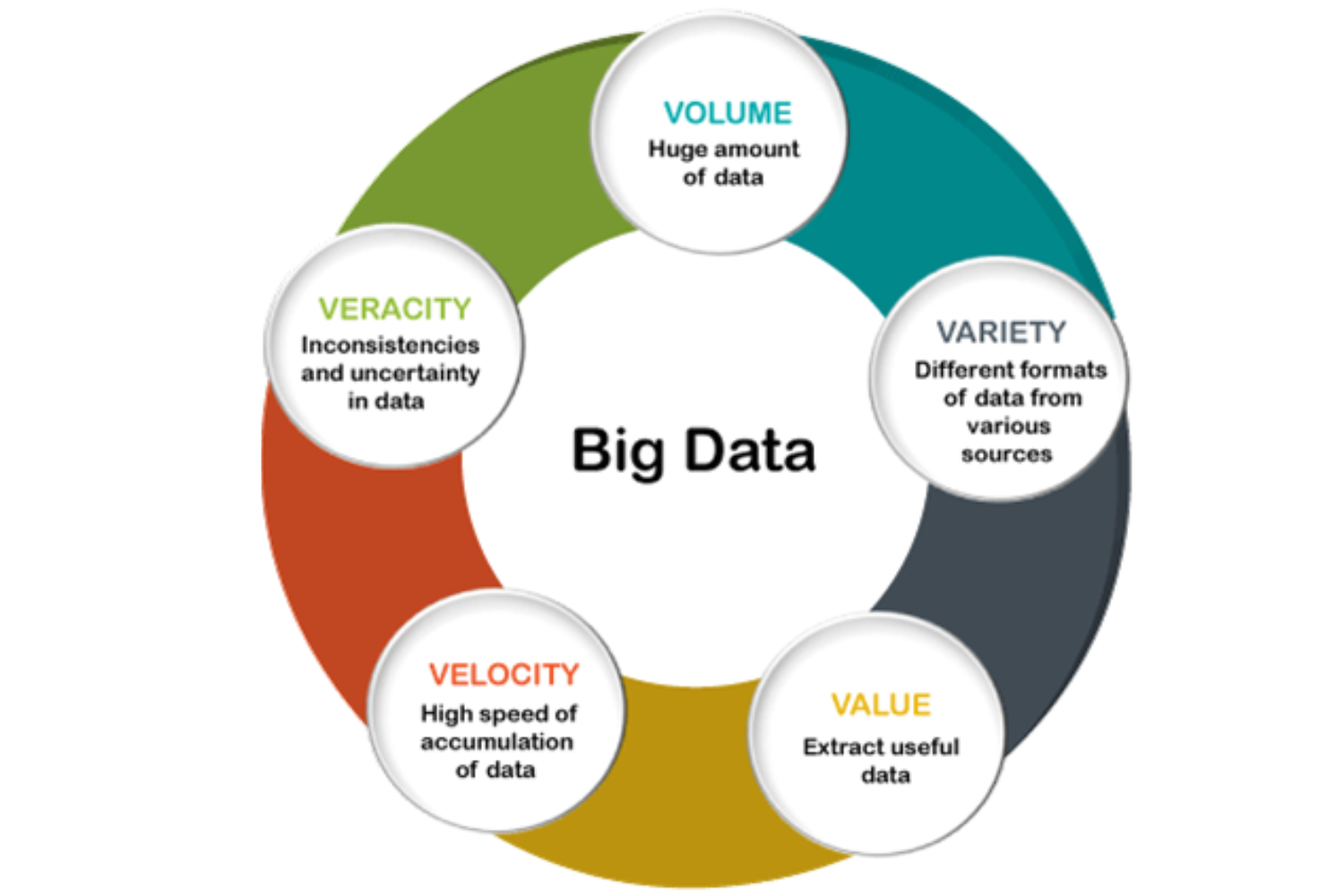

Big data refers to the vast and diverse volume of data that exceeds the processing capabilities of traditional databases. The fundamentals of big data can be summarized as the “Three Vs”:

Volume: Big data involves handling massive amounts of data, often ranging from terabytes to petabytes or more, which can come from various sources like sensors, social media, and transaction records.

Velocity: Data is generated and collected at high speeds, often in real-time. This requires efficient data processing and analysis to keep up with the continuous influx of information.

Variety: Big data encompasses structured, semi-structured, and unstructured data, including text, images, video, and more. This diversity necessitates flexible storage and processing methods.

In addition to the Three Vs, “Variability” and “Veracity” are sometimes added to capture how data changes over time and how trustworthy it is. Managing big data and extracting insights from it requires advanced technologies and analytics, which help organizations make data-driven decisions and gain a competitive edge.
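Velocity means data is handled as it arrives rather than collected into one large batch first. A common streaming building block is the tumbling window: a fixed, non-overlapping time interval whose results are emitted as soon as the interval closes. The sketch below is a simplified, single-machine illustration. It assumes events arrive as hypothetical `(timestamp_in_seconds, payload)` pairs in timestamp order; real stream processors (for example, Spark Structured Streaming with watermarks) also have to cope with late and out-of-order data.

```python
def tumbling_window_counts(events, window_seconds):
    """Yield (window_start, count) each time a tumbling window closes.

    Assumes events arrive in timestamp order (no late data). Windows that
    receive no events are not emitted.
    """
    current_start, count = None, 0
    for timestamp, _payload in events:
        # Align the timestamp to the start of its fixed-size window
        start = timestamp - (timestamp % window_seconds)
        if current_start is None:
            current_start = start
        elif start != current_start:
            # A later window has begun, so the previous one is complete
            yield current_start, count
            current_start, count = start, 0
        count += 1
    if current_start is not None:
        # Flush the last open window when the input ends
        yield current_start, count

# Hypothetical event stream: timestamps in seconds with dummy payloads
events = [(0, "a"), (3, "b"), (9, "c"), (10, "d"), (25, "e")]
print(list(tumbling_window_counts(events, 10)))
# → [(0, 3), (10, 1), (20, 1)]
```

Because it is a generator, results come out window by window while input is still being consumed, which mirrors the continuous processing that high-velocity data calls for.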

TYPES OF BIG DATA

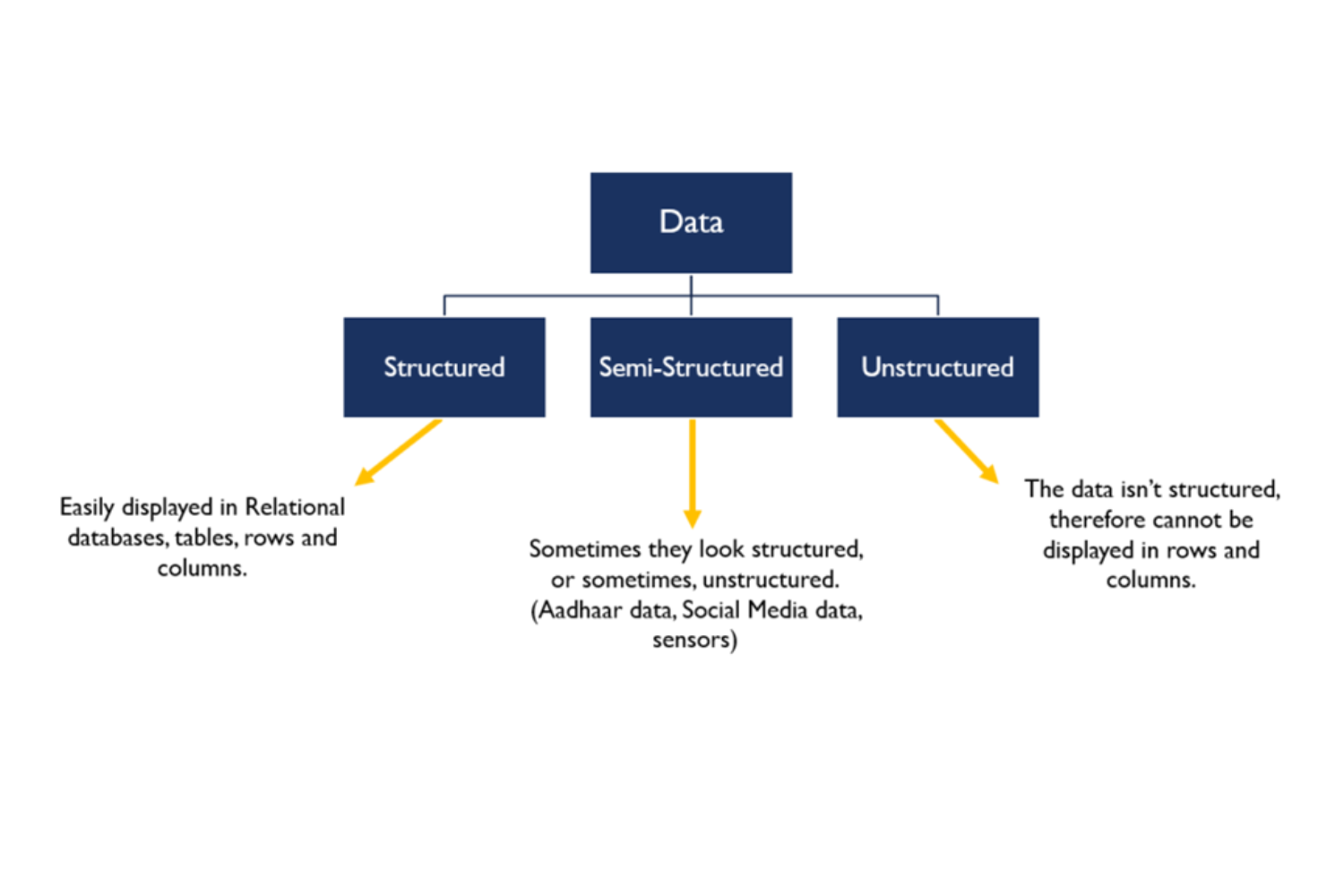

Big data can be categorized into three primary types:

Structured Data: This type includes well-organized, tabular data with a clear format, such as databases, spreadsheets, and financial records. It’s highly suitable for relational database management systems.

Unstructured Data: Unstructured data lacks a predefined structure and is often in the form of text, images, videos, or social media posts. Analyzing unstructured data requires specialized tools like natural language processing and image recognition.

Semi-Structured Data: This data type is a hybrid, featuring some structure but not as rigid as structured data. Examples include XML and JSON files commonly used in web development and NoSQL databases. Semi-structured data provides flexibility while maintaining some organization.

These categories encompass the diverse data sources that make up the landscape of big data, requiring various tools and techniques for effective management and analysis.
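Semi-structured data such as JSON is flexible, so testers usually check that each record still has the fields and types downstream jobs depend on, while allowing extra optional fields. The following sketch validates JSON-lines input against a small, hypothetical expected schema (`user_id` as an integer, `event` as a string). The schema and the sample records are illustrative assumptions, not a standard.

```python
import json

# Hypothetical expected schema: field name -> required Python type
EXPECTED_SCHEMA = {"user_id": int, "event": str}

def validate_json_lines(lines):
    """Split JSON-lines input into valid records and (line_number, reason) errors."""
    valid, errors = [], []
    for lineno, line in enumerate(lines, start=1):
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            errors.append((lineno, f"malformed JSON: {exc.msg}"))
            continue
        if not isinstance(record, dict):
            errors.append((lineno, "not a JSON object"))
            continue
        for field, expected_type in EXPECTED_SCHEMA.items():
            if field not in record:
                errors.append((lineno, f"missing field '{field}'"))
                break
            if not isinstance(record[field], expected_type):
                errors.append((lineno, f"wrong type for '{field}'"))
                break
        else:
            # No check failed; extra fields such as "tags" are allowed
            valid.append(record)
    return valid, errors

sample = [
    '{"user_id": 1, "event": "click", "tags": ["promo"]}',  # valid, with an optional field
    '{"user_id": "2", "event": "view"}',                     # user_id is a string
    '{"event": "purchase"}',                                 # user_id is missing
    '{"user_id": 3, "event": "view"',                        # truncated JSON
]
valid, errors = validate_json_lines(sample)
print(len(valid), [lineno for lineno, _ in errors])
# → 1 [2, 3, 4]
```

Reporting the line number and reason for each rejected record, instead of silently dropping it, makes it easier to trace bad data back to its source.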

ROLE OF BIG DATA TESTING

The role of big data, in simple terms, is to help organizations make better decisions by collecting, processing, and analyzing large amounts of information. This data is used to uncover insights, improve operations, and gain a competitive advantage. Big data testing is essential to ensure this information is accurate, secure, and processed efficiently.

Businesses are not the only users: government agencies use big data to improve public services and policy decisions. Big data also underpins artificial intelligence and machine learning, enabling advances in automation and data-driven innovation across industries and ultimately improving efficiency and competitiveness.

IMPORTANCE OF BIG DATA TESTING

Big data testing is crucial because it ensures the accuracy and reliability of the massive amounts of data that organizations rely on for making important decisions. Think of it as quality control for data. Just like you want to make sure the ingredients in your recipe are fresh and correct to create a delicious dish, big data testing checks that the data is accurate, complete, and secure. It helps prevent errors and ensures that the data analysis tools and processes work correctly. Without proper testing, businesses could make flawed decisions based on faulty data, potentially leading to significant problems and losses.
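One common way to check that data was not lost or corrupted on its way from a source system to a target (for example, after an ETL load) is reconciliation: compare record counts, then compare per-row fingerprints. The sketch below is a single-machine illustration with hypothetical rows represented as tuples. It treats `None` as an empty string when hashing, which is a simplifying assumption; a production check would define null handling and column order explicitly and would typically run on a distributed engine.

```python
import hashlib
from collections import Counter

def row_fingerprint(row):
    """Hash a row's values so rows can be compared independently of storage format."""
    # Assumption: None is hashed as an empty string; \x1f separates the values
    joined = "\x1f".join("" if v is None else str(v) for v in row)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()

def reconcile(source_rows, target_rows):
    """Compare row counts and row fingerprints between source and target data."""
    # Counters act as multisets, so duplicate rows and row order are handled
    source_fp = Counter(row_fingerprint(r) for r in source_rows)
    target_fp = Counter(row_fingerprint(r) for r in target_rows)
    return {
        "source_count": sum(source_fp.values()),
        "target_count": sum(target_fp.values()),
        # Counter subtraction keeps only positive counts (multiset difference)
        "missing_in_target": sum((source_fp - target_fp).values()),
        "unexpected_in_target": sum((target_fp - source_fp).values()),
    }

# Hypothetical data: counts match, but one row was lost and another appeared
source = [(1, "alice", 100.0), (2, "bob", 250.5), (3, "carol", None)]
target = [(1, "alice", 100.0), (3, "carol", None), (4, "dave", 75.0)]
summary = reconcile(source, target)
print(summary)
# → {'source_count': 3, 'target_count': 3, 'missing_in_target': 1, 'unexpected_in_target': 1}
```

The sample shows why a count check alone is not enough: both sides hold three rows, yet the fingerprints reveal one missing row and one unexpected row.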

BENEFITS OF BIG DATA TESTING

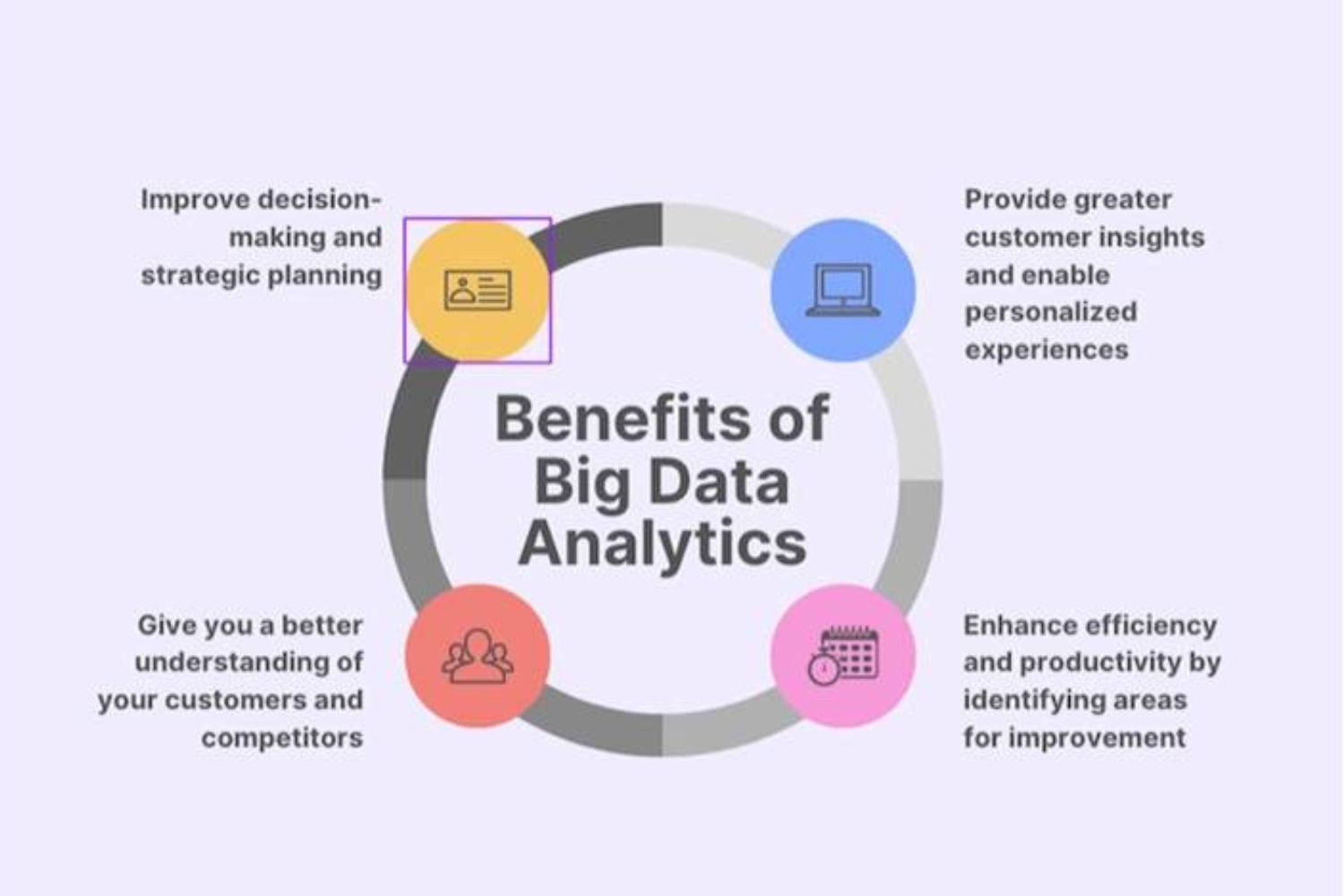

Big data offers the following key benefits:

Informed Decisions: Helps organizations make smarter decisions by providing valuable insights from large datasets.

Improved Efficiency: Optimizes operations, reduces costs, and enhances productivity through data analysis.

Enhanced Customer Experience: Personalizes services and products, improving customer satisfaction and loyalty.

Competitive Advantage: Identifies market trends, customer preferences, and opportunities for staying ahead of the competition.

Innovation: Drives scientific discoveries, healthcare advancements, and better products through data-driven insights.

Risk Management: Assists in identifying and mitigating risks in various industries, from finance to cybersecurity.

JOB ROLES IN BIG DATA TESTING

1. Big Data Test Engineer

2. Big Data QA Analyst

3. Big Data Test Architect

4. Data Quality Analyst

5. Big Data Performance Tester

SALARY

The average salary ranges from approximately 13 lakhs to 35 lakhs per annum.

TECHNOLOGIES USED IN BIG DATA TESTING

Big data technologies are the tools and systems used to handle large volumes of data efficiently. Here are some key technologies in simple terms:

Hadoop: Think of it as a big data storage and processing system. It can store and analyze vast amounts of data across a network of computers.

Spark: It’s a faster, more versatile alternative to Hadoop’s MapReduce processing engine, and it can also run on Hadoop clusters. Spark processes data in memory, which makes complex data analysis quicker.

NoSQL Databases: These are like digital filing cabinets for unstructured and semi-structured data such as social media posts and sensor data. They’re flexible and scalable.

Data Warehouses: These are like organized libraries for structured data. They store data for easy access and analysis, typically for business intelligence and reporting.

Machine Learning: It’s like teaching computers to learn from data so they can make predictions and recommendations and automate tasks.

Data Streaming: Data arrives as a continuous flow and is processed in real time, which suits applications like monitoring, fraud detection, and IoT devices.

These technologies are crucial for managing and deriving valuable insights from the massive amounts of data generated in today’s digital world.
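Hadoop’s classic processing model, MapReduce, splits a job into a map phase, a shuffle that groups intermediate results by key, and a reduce phase. The sketch below simulates that model in plain Python for a word count. It is not Hadoop’s API; the function names are hypothetical. It also shows a basic testing idea: check the job’s output against an independent, simpler reference computation on a small input.

```python
from collections import Counter, defaultdict
from itertools import chain

def map_phase(line):
    """Mapper: emit (word, 1) for every word in a line."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle_phase(pairs):
    """Shuffle: group emitted values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reducer: sum the values for each key."""
    return {key: sum(values) for key, values in groups.items()}

def run_word_count(lines):
    # Run the mapper over every input line and flatten the emitted pairs
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return reduce_phase(shuffle_phase(mapped))

lines = ["Big data needs testing", "testing big data pipelines"]
result = run_word_count(lines)

# Test: the job's output must match a straightforward reference count
expected = Counter(word.lower() for line in lines for word in line.split())
assert result == dict(expected), "MapReduce output differs from reference count"
print(result["testing"])  # → 2
```

Comparing against a reference implementation on small, known inputs is a practical way to catch logic errors in a processing job before running it on the full dataset.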

COURSE HIGHLIGHTS

1. Suited for students, freshers, professionals, and corporate employees

2. Live online classes

3. 4-month program

4. Certificate of completion

5. Decision-oriented program of analysis

6. Live classes by highly experienced faculty

7. Hands-on experience with real-life case studies

CONCLUSION

In conclusion, big data is a powerful concept that involves collecting and analyzing massive amounts of information from various sources. It helps organizations make better decisions, understand trends, and gain insights. Big data can transform businesses, improve healthcare, enhance services, and more, but it also raises concerns about privacy and security. As technology advances, harnessing the potential of big data becomes increasingly important, shaping our digital world and the way we interact with it.