Machine Learning Model Testing

What Is a Machine Learning Model Test?

In the realm of machine learning, a “model test” is a pivotal phase in the model development process. It’s the stage where the performance of a trained machine learning model is thoroughly evaluated. This evaluation assesses how well the model can make predictions on new, unseen data, which is essential for real-world applications. The model test typically uses a separate dataset that was held out from training, mirroring the conditions the model will encounter in practice.

Performance metrics specific to the problem, such as accuracy, precision, recall, or mean squared error, are calculated to gauge the model’s effectiveness. The evaluation process helps identify whether the model is accurate, robust, and can generalize to new data. If necessary, adjustments and iterations are made to enhance its performance. This critical testing phase ensures that the model meets the desired criteria for deployment in practical applications.
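To make these metrics concrete, here is a minimal from-scratch sketch in Python for a binary classifier (accuracy, precision, recall) and a regressor (mean squared error). The function names and the toy labels are illustrative assumptions; in practice, scikit-learn provides equivalents in `sklearn.metrics`, such as `accuracy_score`, `precision_score`, `recall_score`, and `mean_squared_error`.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, false negatives, true negatives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = len(y_true) - tp - fp - fn
    return tp, fp, fn, tn


def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision(y_true, y_pred, positive=1):
    """Of everything predicted positive, how much was actually positive."""
    tp, fp, _, _ = confusion_counts(y_true, y_pred, positive)
    return tp / (tp + fp) if tp + fp else 0.0


def recall(y_true, y_pred, positive=1):
    """Of everything actually positive, how much the model found."""
    tp, _, fn, _ = confusion_counts(y_true, y_pred, positive)
    return tp / (tp + fn) if tp + fn else 0.0


def mean_squared_error(y_true, y_pred):
    """Average squared difference between true and predicted values (regression)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy test-set labels and model predictions (hypothetical).
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1]
print(accuracy(y_true, y_pred))            # 0.5
print(precision(y_true, y_pred))           # 0.5
print(round(recall(y_true, y_pred), 3))    # 0.667
print(round(mean_squared_error([3.0, 5.0, 2.5], [2.5, 5.0, 3.0]), 3))  # 0.167
```

Which metric matters most depends on the cost of each error type: recall when missed positives are expensive, precision when false alarms are.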

Here are the key components of an ML model test:

Test Data: This is a separate dataset that the model has not seen during its training phase. It’s used to evaluate the model’s performance on new, unseen examples.

Performance Metrics: These are specific measures used to assess how well the model is performing. The choice of metrics depends on the type of machine learning problem: for example, accuracy, precision, and recall for classification, or mean squared error for regression.

Evaluation Process: The model is applied to the test data, and its predictions are compared to the actual values in the test dataset. The chosen performance metrics are then calculated to determine how effectively the model is making predictions.

Adjustments and Iteration: Based on the test results, you may need to fine-tune the model, adjust hyperparameters, or explore different algorithms to improve its performance.

Overfitting and Generalization: Model testing helps identify issues like overfitting, ensuring that the model can generalize well to new, unseen data, rather than memorizing the training data.

Cross-Validation: In some cases, cross-validation is used to make the evaluation more robust. Cross-validation splits the data into multiple subsets (folds) and performs multiple rounds of training and testing, giving a more reliable estimate of the model’s performance than a single train-test split.
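The idea behind k-fold cross-validation can be sketched in plain Python: shuffle the indices once, cut them into k folds, and let each fold serve as the test set exactly once while the remaining folds are used for training. The `fit`/`score` callables and the mean-predictor baseline below are hypothetical placeholders for a real model; scikit-learn’s `KFold` and `cross_val_score` implement the same procedure.

```python
import random
from statistics import mean


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs; each sample is in exactly one test fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once so folds don't follow the original order
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


def cross_validate(xs, ys, fit, score, k=5, seed=0):
    """Train on k-1 folds, score on the held-out fold, and return the k scores."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(xs), k, seed):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in test_idx], [ys[i] for i in test_idx]))
    return scores


# Hypothetical baseline "model": always predict the mean of the training targets.
def fit_mean(xs, ys):
    return mean(ys)


def mse_score(model, xs, ys):
    return mean((y - model) ** 2 for y in ys)


xs = list(range(20))
ys = [float(x % 4) for x in xs]
scores = cross_validate(xs, ys, fit_mean, mse_score, k=5)
print(len(scores), round(mean(scores), 3))  # number of folds and the average MSE across them
```

Reporting the spread of the per-fold scores, not just their average, shows how sensitive the result is to the particular split.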

How Does Machine Learning Model Testing Work?

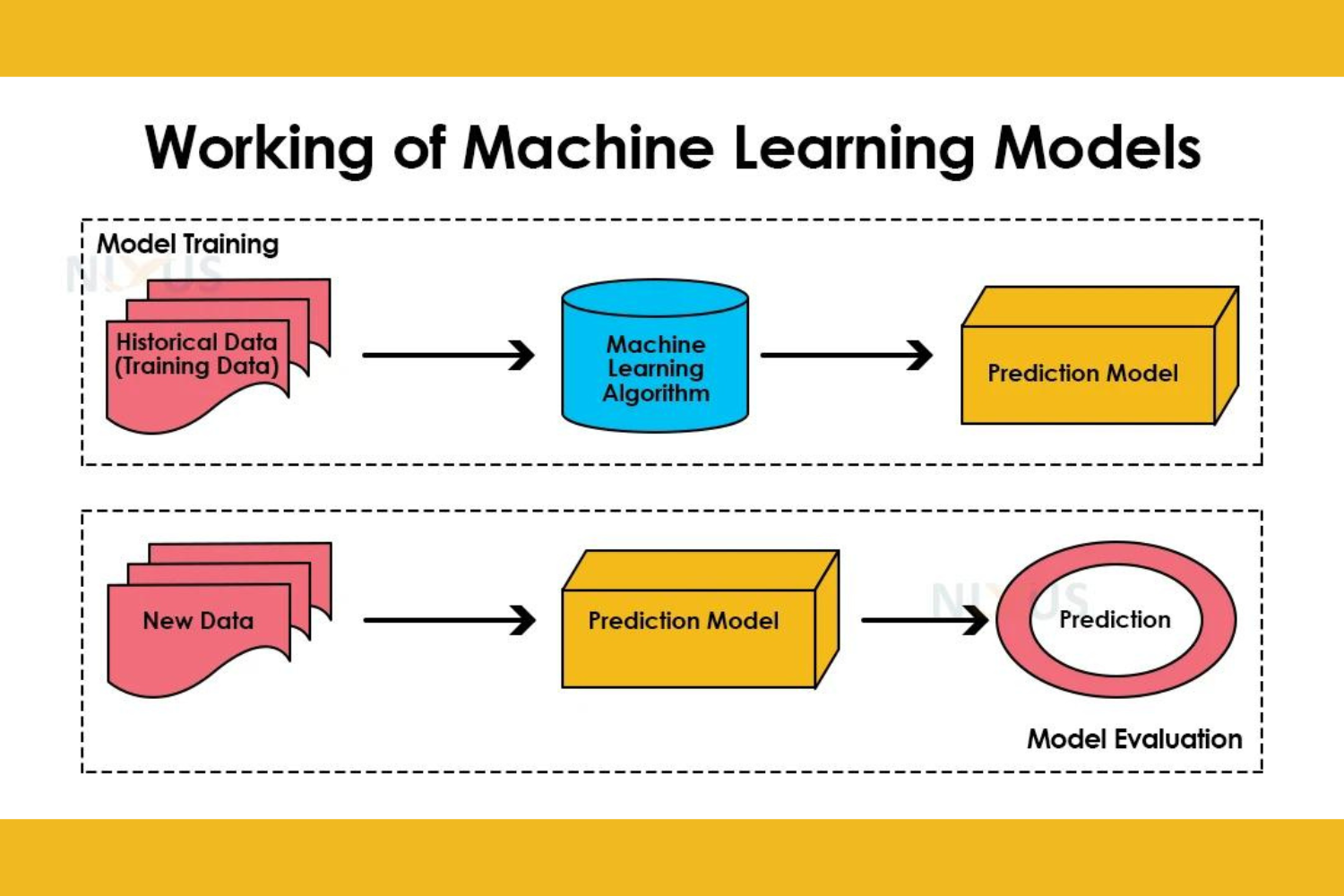

The process of testing a machine learning model involves the following steps:

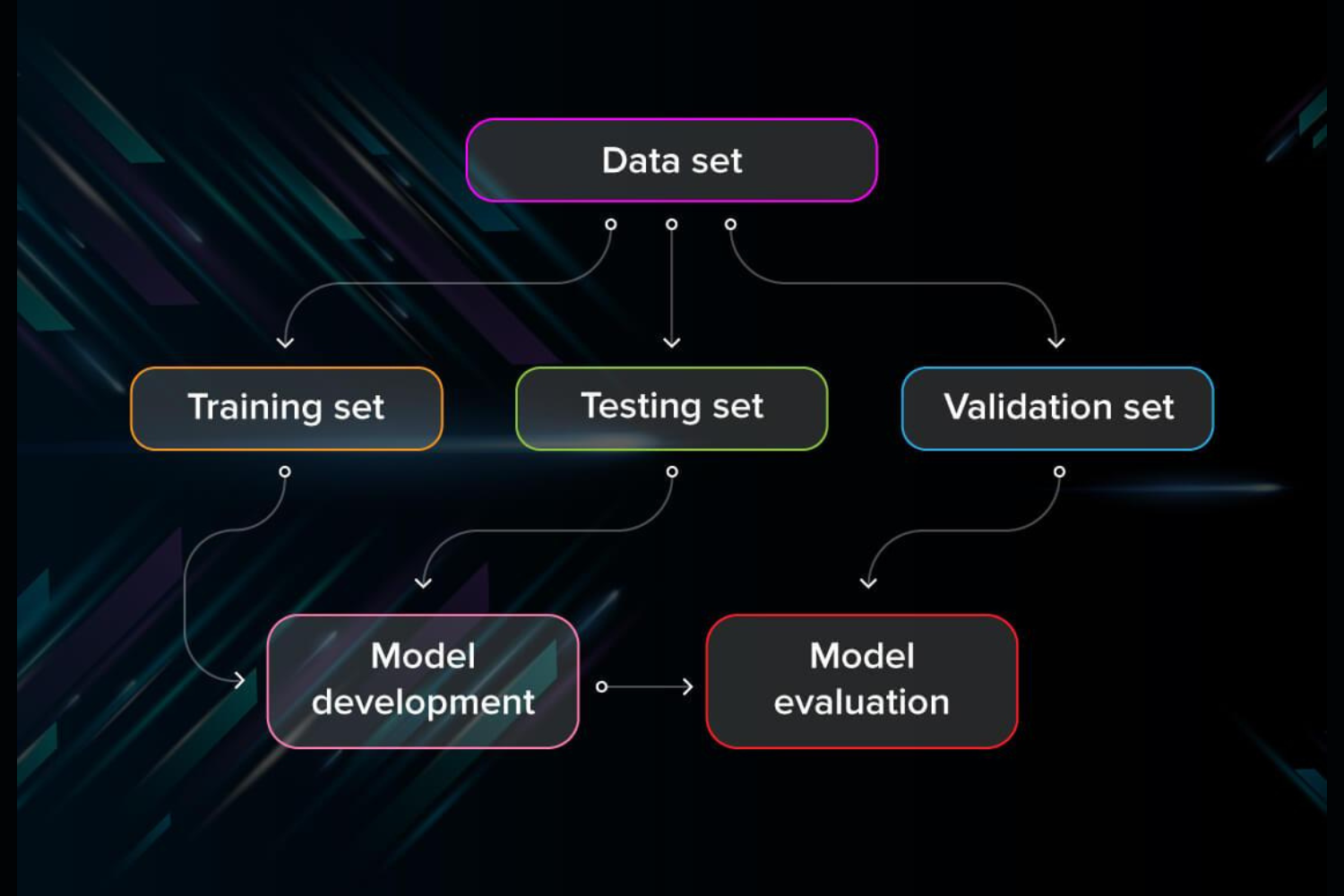

Data Splitting: The dataset is divided into two or more subsets, typically a training set and a test set, and often a separate validation set as well. In more advanced scenarios, you may use techniques like cross-validation to split the data into multiple subsets.

Training: The machine learning model is trained on the training set. During training, the model learns the underlying patterns and relationships in the data.

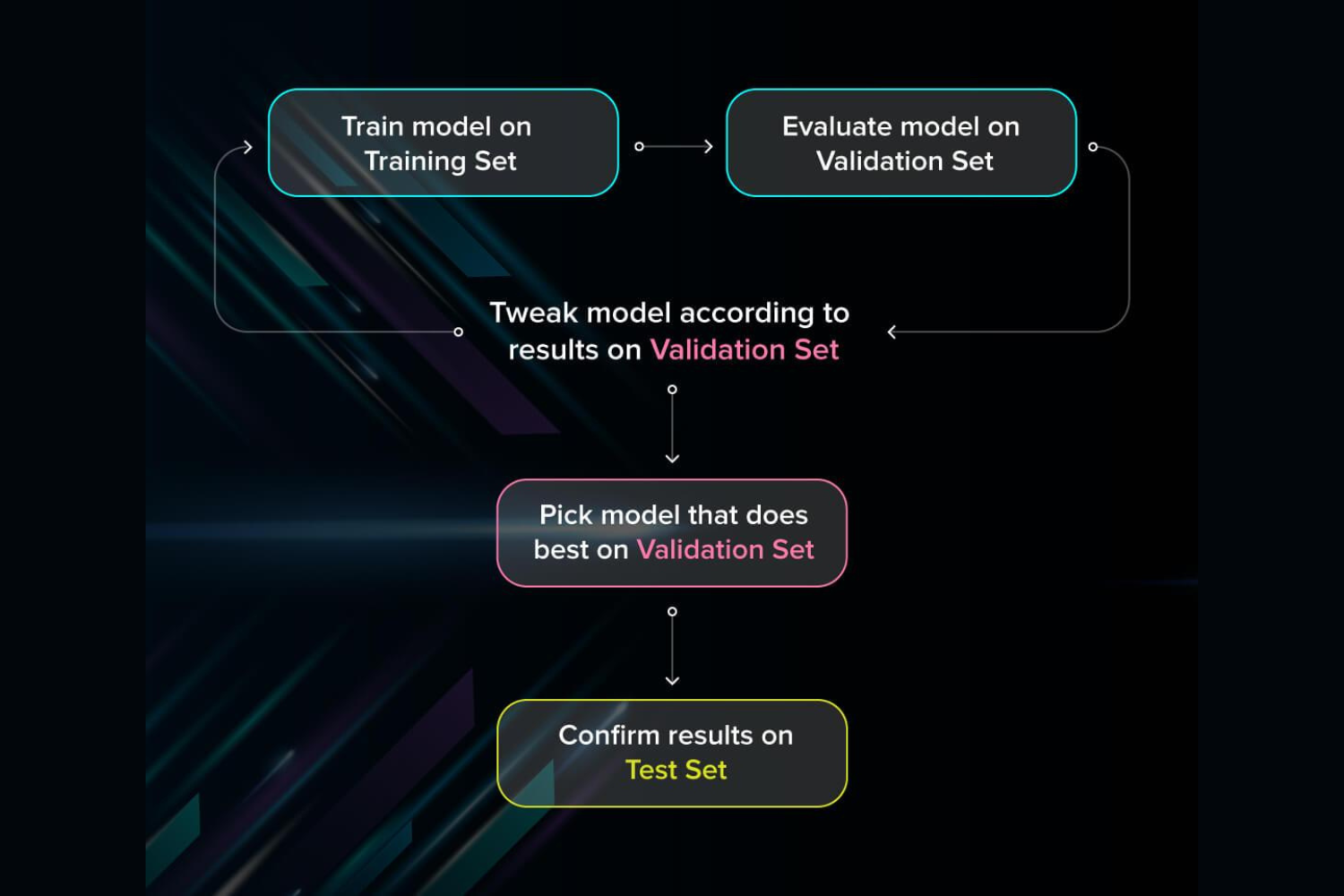

Testing: The model is then evaluated on held-out data: the validation set, if you have one, during development, and the test set for the final assessment. It makes predictions on this data, and its performance is assessed using predefined metrics, such as accuracy, precision, recall, or mean squared error.

Performance Evaluation: The model’s predictions are compared to the actual, known outcomes in the test set. The choice of performance metrics depends on the specific machine learning task (classification, regression, etc.).

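Adjustment and Optimization: Based on the results, you may need to adjust the model’s hyperparameters, choose a different algorithm, or take other corrective actions to improve its performance. These decisions are best made using validation results, so that the test set remains an unbiased final check.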

Generalization Check: It’s crucial to ensure that the model can generalize well to unseen data, meaning that it performs well in real-world scenarios beyond the training and test data. Overfitting, where the model memorizes the training data but performs poorly on new data, should be avoided.

Iterative Process: The testing and refinement process can be iterative. You may need to repeat the steps above multiple times to fine-tune the model and improve its performance.
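The steps above can be sketched end to end in plain Python: split synthetic data, train a one-feature linear regression with ordinary least squares, and compare training and test error as a simple generalization check. The synthetic data, the 25% test fraction, and the helper names are assumptions chosen for the example, not a prescribed setup.

```python
import random
from statistics import mean


def train_test_split(xs, ys, test_fraction=0.25, seed=42):
    """Shuffle indices and hold out a fraction of the data for testing."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    n_test = int(len(xs) * test_fraction)
    test, train = idx[:n_test], idx[n_test:]
    return ([xs[i] for i in train], [ys[i] for i in train],
            [xs[i] for i in test], [ys[i] for i in test])


def fit_line(xs, ys):
    """Ordinary least squares for y = a * x + b with a single feature."""
    x_bar, y_bar = mean(xs), mean(ys)
    a = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
         / sum((x - x_bar) ** 2 for x in xs))
    return a, y_bar - a * x_bar


def mse(model, xs, ys):
    """Mean squared error of the fitted line on the given data."""
    a, b = model
    return mean((y - (a * x + b)) ** 2 for x, y in zip(xs, ys))


# Synthetic data: y = 3x + 1 plus Gaussian noise (illustrative only).
rng = random.Random(0)
xs = [i / 10 for i in range(100)]
ys = [3 * x + 1 + rng.gauss(0, 0.5) for x in xs]

x_tr, y_tr, x_te, y_te = train_test_split(xs, ys)  # 1. data splitting
model = fit_line(x_tr, y_tr)                        # 2. training
train_err = mse(model, x_tr, y_tr)                  # error on data the model has seen
test_err = mse(model, x_te, y_te)                   # 3-4. testing and evaluation on held-out data
print(f"slope={model[0]:.2f} intercept={model[1]:.2f}")
print(f"train MSE={train_err:.3f} test MSE={test_err:.3f}")
# Generalization check: a test error far above the training error suggests overfitting.
```

A linear model this simple is unlikely to overfit, so the two errors should be close here; with a very flexible model, a widening gap between them is the signal to simplify or regularize.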

Overall, the testing phase is a critical part of the machine learning workflow. It helps assess the model’s ability to make accurate predictions on new, unseen data and ensures its reliability in practical applications.

Role of Machine Learning Model Testing

Here are the key aspects and responsibilities associated with the role of machine learning model testing:

Data Preparation: Ensuring that the data used for testing is representative of the real-world scenario and is appropriately preprocessed to match the data the model will encounter in production.

Train-Test Split: Splitting the data into training and testing sets, where the training data is used to train the model, and the testing data is used to evaluate its performance.

Cross-Validation: Employing techniques like k-fold cross-validation to assess how well the model generalizes to new data and to mitigate issues related to data partitioning.

Model Evaluation Metrics: Selecting appropriate evaluation metrics based on the problem type (e.g., accuracy, precision, recall, F1-score, and AUC-ROC for classification; mean absolute error and mean squared error for regression) to measure the model’s performance.

Hyperparameter Tuning: Experimenting with different hyperparameters to optimize the model’s performance, often using techniques like grid search or random search.

Feature Engineering: Iteratively refining the features used in the model to improve its predictive power and robustness.

Testing for Bias and Fairness: Assessing the model for biases and fairness issues, ensuring that it does not discriminate against any particular group or demographic.

Model Interpretability: Evaluating the model’s interpretability and understanding its decision-making process, especially in applications where transparency is essential.

Scalability and Performance: Ensuring that the model can handle real-world workloads and perform efficiently, especially for large-scale deployments.

Error Analysis: Identifying and understanding the types of errors the model makes and determining if they are acceptable or require further refinement.

Deployment Testing: Testing the model in the deployment environment to ensure it works as expected and integrates smoothly with other systems.

Monitoring and Maintenance: Setting up systems for continuous monitoring and retesting to ensure the model’s performance remains satisfactory over time, even as the data distribution evolves.

Documentation and Reporting: Creating comprehensive documentation on model testing procedures, results, and any issues encountered, and communicating these findings to relevant stakeholders.

Collaboration: Collaborating with data scientists, machine learning engineers, and domain experts to understand the model’s context and to gather insights that help in the testing process.

Compliance and Regulation: Ensuring that the model complies with relevant laws and regulations, especially in sensitive domains like healthcare or finance.
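Testing for bias and error analysis often start with the same move: breaking a single aggregate metric down by subgroup. The sketch below computes accuracy per group and the largest gap between groups. The data, the group labels, and the 0.1 tolerance are hypothetical, and accuracy parity is only one of several possible fairness criteria.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} so performance gaps between subgroups become visible."""
    correct, total = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical labels, predictions, and a group attribute for each example.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

MAX_GAP = 0.1  # hypothetical tolerance; the acceptable gap is a policy decision
per_group = accuracy_by_group(y_true, y_pred, groups)
gap = max_accuracy_gap(per_group)
print(per_group)  # {'A': 1.0, 'B': 0.25}
if gap > MAX_GAP:
    print(f"Accuracy gap {gap:.2f} exceeds tolerance {MAX_GAP}")
```

The overall accuracy here is 0.625, which hides the fact that the model is nearly always wrong for group B; the per-group view is also a natural starting point for error analysis.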

How Machine Learning Can Be Utilized In Software Testing

Here are several ways in which machine learning can be applied to software testing:

Test Data Generation: Machine learning algorithms can analyze the code and historical test data to automatically generate test cases and test data. This can help identify edge cases and potential vulnerabilities in the software.

Test Case Prioritization: Machine learning can help prioritize test cases based on their likelihood to uncover defects or their impact on the system. This ensures that the most critical test cases are executed first, helping to find and fix issues more quickly.

Anomaly Detection: Machine learning can be used to detect anomalies in the system’s behavior. By training models on normal system behavior, any deviations can be flagged as potential issues, helping in early defect detection.

Defect Prediction: Machine learning can predict which parts of the code are more likely to contain defects. This allows for more targeted testing efforts and can save time and resources.

Test Automation: Machine learning can assist in the automation of test script creation, maintenance, and execution. It can automatically update test scripts when the application under test changes, reducing the need for manual script maintenance.

Log Analysis: Machine learning can analyze application logs and identify patterns associated with defects. It can help in proactively detecting and diagnosing issues in the application.

Performance Testing: Machine learning can be used to simulate and predict system performance under various conditions. This can help identify potential performance bottlenecks and optimize the software accordingly.

User Interface Testing: Machine learning can assist in testing user interfaces by automating the identification of UI elements and their interactions. This helps in creating more robust UI tests.

Test Case Generation from Requirements: Machine learning can assist in automatically generating test cases from software requirements, ensuring that test coverage aligns with the intended functionality.

Regression Testing: Machine learning can help identify which areas of code are most likely to be affected by changes and prioritize regression testing accordingly.

Test Result Analysis: Machine learning can analyze test results and identify patterns in test failures, helping to quickly isolate and fix issues.

Predictive Maintenance: In a continuous integration/continuous deployment (CI/CD) environment, machine learning can predict when test environments or infrastructure might fail, allowing teams to take preventive action.

Test Environment Optimization: Machine learning can assist in optimizing test environments, resource allocation, and test execution schedules to ensure efficient use of resources.

Code Review and Static Analysis: Machine learning can assist in identifying code quality issues and security vulnerabilities during code reviews, thereby preventing issues from reaching the testing phase.
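The anomaly-detection idea from the list above can be sketched with a very simple statistical model: learn the mean and standard deviation of a metric (here, response times) from healthy test runs, then flag new values that fall too far outside that range. This z-score rule is a stand-in for a learned detector (scikit-learn’s `IsolationForest` is one real alternative), and the timings and the 3-standard-deviation threshold are assumptions.

```python
from statistics import mean, stdev


def fit_baseline(samples):
    """Learn 'normal' behaviour as the mean and standard deviation of healthy runs."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, z_threshold=3.0):
    """Flag values more than z_threshold standard deviations away from the mean."""
    mu, sigma = baseline
    return abs(value - mu) > z_threshold * sigma


# Hypothetical response times (ms) collected from passing test runs.
normal_runs = [102, 98, 105, 99, 101, 97, 103, 100, 104, 96]
baseline = fit_baseline(normal_runs)

new_runs = [101, 99, 180]
print([is_anomalous(v, baseline) for v in new_runs])  # [False, False, True]
```

The same pattern applies to any metric recorded per run, such as memory use or error counts; the threshold trades missed regressions against false alarms.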

Why is ML Model Testing Important?

Machine learning systems are driven by statistics and are often expected to make decisions without human intervention. Before such systems are trusted, they need to be tested against the demands of the target environment and users’ expectations. Good ML testing strategies aim to reveal potential issues with design, model selection, and programming so that the system functions reliably. Although ML testing has its challenges, it offers the following benefits:

1. Data issues: In machine learning, a data-driven programming domain, the training and test data determine the model’s behavior. ML testing can reveal data inconsistencies such as inaccurate or biased labels, a mismatch between the training and test sets, tainted or contaminated data, and inaccurate assumptions about the data the model will see after deployment.

2. Framework design issues: An improperly designed framework may give rise to problems, particularly if scalability requirements were disregarded, and ML testing can detect them.

3. Learning program issues: The learning program, which forms the basis of the machine learning system, may be either custom code or a legacy component of the framework. Model testing can detect flaws in the learning programs that implement, set up, and manage the machine learning system.
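Several of the data problems listed above can be checked automatically before any model is trained. The sketch below looks for rows shared between the training and test sets (a common source of leakage), labels outside the expected set, and differences in class balance between the two sets. The helper names and the toy data are hypothetical.

```python
from collections import Counter


def find_overlap(train_rows, test_rows):
    """Rows present in both sets; duplicates across the split can inflate test scores."""
    return set(map(tuple, train_rows)) & set(map(tuple, test_rows))


def find_invalid_labels(labels, allowed):
    """(index, label) pairs whose label is not in the allowed set, e.g. typos."""
    return [(i, y) for i, y in enumerate(labels) if y not in allowed]


def label_shares(labels):
    """Fraction of examples per class, to compare class balance across splits."""
    counts = Counter(labels)
    return {label: n / len(labels) for label, n in counts.items()}


# Toy feature rows and labels (hypothetical).
train_rows = [(5.1, 3.5), (4.9, 3.0), (6.2, 2.9), (5.5, 2.4)]
test_rows = [(4.9, 3.0), (5.8, 2.7)]
train_labels = ["cat", "cat", "dgo", "dog"]
test_labels = ["dog", "dog"]

print(find_overlap(train_rows, test_rows))                # {(4.9, 3.0)}
print(find_invalid_labels(train_labels, {"cat", "dog"}))  # [(2, 'dgo')]
print(label_shares(train_labels), label_shares(test_labels))
```

Checks like these are cheap enough to run on every data refresh, which helps catch problems before they silently change the model’s behavior.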

What Are The Different Types Of ML Model Testing?

Different types of machine learning model testing include:

1. Manual Error Analysis:

Involves the manual inspection of the model’s errors to gain insights into its failures.

Helps identify patterns, common failure modes, and areas for improvement.

Provides qualitative analysis to enhance the model’s performance.


2. Naive Single Prediction Tests:

A straightforward method to assess a model’s ability to make individual predictions.

Provides a quick initial check of model performance, but because model outputs are probabilistic, a single correct or incorrect prediction says little about how the model behaves overall.

3. Minimum Functionality Tests:

Focuses on specific components or functionalities of the ML system rather than evaluating the entire model.

Useful for complex models where each component’s correctness is critical.

4. Invariance Tests:

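Evaluates whether the model’s prediction stays the same when the input data is changed in ways that should not affect the outcome, for example when a person’s name in a product review is replaced with another name.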

Helps determine whether the model is robust to changes in input data, which is crucial for real-world reliability.
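Minimum functionality and invariance tests can be written like ordinary unit tests. In the sketch below, a hypothetical keyword-counting function stands in for a trained sentiment model; the word lists, test cases, and names are illustrative assumptions. The functions follow pytest’s `test_` naming convention, so pytest would collect them, but they also run as plain Python calls.

```python
import re

# Hypothetical stand-in for a trained model: a keyword-counting sentiment scorer.
POSITIVE = {"love", "great", "excellent"}
NEGATIVE = {"hate", "terrible", "awful"}


def predict_sentiment(text):
    """Return 'positive', 'negative', or 'neutral' from keyword counts."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"


def test_minimum_functionality():
    # Simple, unambiguous cases that any acceptable model must get right.
    cases = [("I love this product", "positive"),
             ("This is terrible", "negative")]
    for text, expected in cases:
        assert predict_sentiment(text) == expected, text


def test_invariance_to_names():
    # Swapping a person's name should not change the predicted sentiment.
    template = "{} said the service was excellent"
    predictions = {predict_sentiment(template.format(name))
                   for name in ["Alice", "Ravi", "Mei"]}
    assert len(predictions) == 1, predictions


test_minimum_functionality()
test_invariance_to_names()
```

With a real model, the same tests would call its prediction function instead; a failing invariance test points to a robustness problem that aggregate accuracy may not reveal.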

These testing methods help ensure that machine learning models not only perform well but also exhibit robustness and reliability in diverse real-world scenarios.

What are the best tools for Machine Learning Model Testing?

Here are some of the best tools for simple machine learning model testing:

1. scikit-learn: A widely used Python library for machine learning that offers simple and effective tools for model evaluation and testing.

2. TensorFlow and PyTorch: Popular deep learning frameworks that provide built-in functions for model evaluation and testing, along with visualization tools like TensorBoard.

3. MLflow: A tool for managing the machine learning lifecycle, including testing and tracking experiments.

4. AutoML Tools: AutoML frameworks such as Google AutoML and H2O.ai, which simplify model selection, tuning, and evaluation.

5. Hyperopt: A Python library for hyperparameter optimization that makes it easier to find the best model configurations.

6. Unit Testing: Traditional unit testing frameworks, such as Python’s unittest or pytest, to ensure your code and model components work correctly.
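As an example of the last item, the sketch below uses Python’s built-in `unittest` module to test a small preprocessing step. The `min_max_scale` function is a hypothetical component chosen for illustration; the same checks could be written as plain `assert` statements for pytest, which can also run `unittest.TestCase` classes.

```python
import unittest


def min_max_scale(values):
    """Hypothetical preprocessing step: rescale values to the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # avoid division by zero for constant input
    return [(v - lo) / (hi - lo) for v in values]


class TestMinMaxScale(unittest.TestCase):
    def test_output_range(self):
        scaled = min_max_scale([3, 7, 11])
        self.assertEqual(min(scaled), 0.0)
        self.assertEqual(max(scaled), 1.0)

    def test_midpoint(self):
        self.assertAlmostEqual(min_max_scale([3, 7, 11])[1], 0.5)

    def test_constant_input(self):
        self.assertEqual(min_max_scale([5, 5, 5]), [0.0, 0.0, 0.0])

    def test_preserves_length(self):
        self.assertEqual(len(min_max_scale([1, 2, 3, 4])), 4)
```

Saved as, say, `test_scaling.py` (a hypothetical file name), the tests run with `python -m unittest test_scaling` or `pytest test_scaling.py`. Edge cases such as constant input are exactly where preprocessing bugs tend to hide.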


Salary Package:

Machine learning test engineer salaries in India range between ₹13 LPA and ₹35 LPA.

Conclusion:

Conducting ML testing is an essential step for ensuring the quality and reliability of a machine learning model. ML testing is distinctive in several ways: it necessitates evaluating both data quality and model performance, and it often involves multiple iterations of hyperparameter tuning to achieve optimal results. When these crucial procedures are diligently followed, you can have a high level of confidence in the model’s performance.